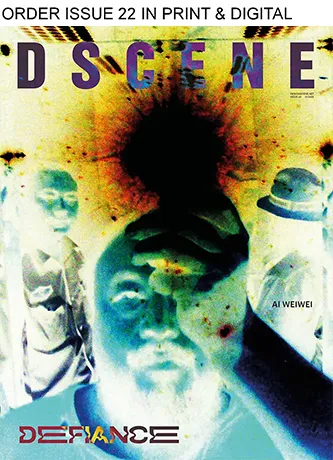

The landscape of the art direction studio has changed. Where walls were once covered with pinned magazine clippings and fabric swatches, screens now display endless streams of visual iterations generated in seconds. For the fashion and design industry, artificial intelligence is no longer a futuristic curiosity; it’s a fully-fledged studio collaborator.

Far from replacing the human eye, generative AI is entering the “messy middle” of the creative process—that chaotic phase between a pure idea and the final execution. Creative directors (CDs) now use these tools to explore compositions, test impossible lighting, or expand sets, thereby redefining the standards of editorial visual production.

The essentials: Key points

- From concept to image, accelerated: Creative directors use AI to validate artistic directions in minutes rather than days, drastically reducing pre-production costs.

- Hybrid techniques: The dominant use is not “all AI,” but a hybrid workflow mixing traditional photography, expert retouching, and generative expansion for rich and complex editorial visuals.

- Democratization of tools: The accessibility of these technologies allows small studios to compete with major houses in terms of visual quality, as highlighted by recent analyses of AI’s impact in design.

From “Mood” to “Mode”: The Acceleration of Pre-Visualization

The first revolution brought by AI to design studios concerns the pre-visualization phase. Traditionally, a creative director would spend hours searching for existing references to communicate a mood. Today, AI allows for the instant materialization of an entirely new intention.

Current tools do more than just blur the line between real and virtual; they act as a search engine for the imagination. Creatives don’t ask AI to do the final job immediately, but to propose variations on a theme—a “golden hour” lighting on a velvet texture, or brutalist architecture integrated into a tropical forest.

★★★★★ More Than Just an AI Photo Generator

“Beyond generation, Getimg AI offers editing tools like canvas painting and background extensions. It integrates creative control similar to how Quillbot AI assists writers by offering stylistic choices and tone refinement during content editing.” (Review published by Karen Hao from Digital Software Labs on April 9th, 2025)

This ability to iterate quickly transforms the dialogue with clients. Instead of saying “imagine this,” the creative director can show ten variations of “this” in real time. Misunderstandings surface before any budget is committed, which substantially reduces creative risk.

High-fidelity prototyping and realism

In this quest for precision, the choice of tool becomes strategic. While some software is known for its strong artistic biases, others focus on photographic fidelity. For editorial use, the credibility of skin texture or the drape of a garment is non-negotiable.

This is where modern workflows distinguish themselves. Creatives are turning to solutions capable of interpreting complex prompts with photorealistic precision. For those looking to integrate these technologies, using a realistic AI generator becomes a crucial step in moving from abstract concept to a usable mock-up.

These tools let the user define not only the subject but also the technical parameters of the virtual shot (focal length, aperture, film type), drawing on a visual vocabulary that creative directors have already mastered.
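As a minimal sketch of how those photographic parameters can be folded into a text prompt, the Python snippet below assembles one from structured fields. The `ShotSpec` class and its field names are hypothetical and invented for illustration; they do not correspond to any platform's API, and the exact phrasing a given model responds to best will vary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShotSpec:
    """Hypothetical container for the parameters of a virtual shot."""
    subject: str
    focal_length_mm: int
    aperture: float
    film_stock: str
    lighting: str = ""

    def to_prompt(self) -> str:
        # Join the parts into one comma-separated prompt string,
        # skipping the lighting note when it is empty.
        parts = [
            self.subject,
            self.lighting,
            f"{self.focal_length_mm}mm lens",
            f"f/{self.aperture:g}",
            f"shot on {self.film_stock}",
        ]
        return ", ".join(p for p in parts if p)


spec = ShotSpec(
    subject="model in a velvet coat",
    focal_length_mm=85,
    aperture=1.8,
    film_stock="Kodak Portra 400",
    lighting="golden hour lighting",
)
print(spec.to_prompt())
# model in a velvet coat, golden hour lighting, 85mm lens, f/1.8, shot on Kodak Portra 400
```

Keeping the shot description structured makes it easy to change one variable at a time, for example swapping the focal length while holding the subject and lighting fixed.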

Key takeaway: AI-powered pre-visualization isn’t a draft; it’s a high-fidelity prototype. It allows for the validation of bold concepts without incurring physical production costs, serving as an immediate visual proof of concept.

Orchestrating the Visual: Control vs. Chaos

Text-to-Image generation opened the door, but it’s precise control that allows professionals to stay in the room. One of the major challenges for creative directors has been the randomness of early AI models. “Happy accidents” are acceptable for art, but not for a rigorous brand campaign.

Mastery through custom models

How can one ensure a generated image carries a brand’s visual DNA? The answer lies in model customization (fine-tuning).

The most advanced agencies no longer rely solely on public base models. They train small custom models on the brand’s archives. In this context, getimg’s open architecture offers welcome flexibility, allowing creators to use models like Stable Diffusion XL while injecting specific LoRAs (Low-Rank Adaptation). This makes it possible, for example, to generate visuals that respect a seasonal color palette or a signature lighting style, something that is harder to lock down on closed systems like Midjourney or DALL-E 3.
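For readers curious about why a LoRA is small enough to train on a brand archive, the technique can be summarized in one equation. This is the general formulation of Low-Rank Adaptation, not a description of how getimg or any other platform implements it, and the symbols are introduced here for illustration only.

$$
W' = W_0 + \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

Here $W_0$ is a frozen $d \times k$ weight matrix of the base model, $B$ and $A$ are the only matrices that get trained, $r$ is the chosen rank, and $\alpha$ is a scaling factor. The adapter stores $r(d + k)$ values instead of $d \cdot k$. With illustrative sizes $d = k = 1024$ and $r = 8$, that is 16,384 values against 1,048,576, or roughly 1.6% of the original layer.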

★★★★★ Image quality & generation speed

“Getimg.ai produces high-quality images that capture both realism and creativity. Colors are vibrant, details are crisp, and the AI handles complex prompts surprisingly well.” (Review published by Sophie from Lovart on December 15th, 2025)

Meanwhile, players like Adobe Firefly are positioning themselves on commercial safety, reassuring legal departments, although sometimes at the cost of creative boldness. Krea, on the other hand, appeals with its real-time upscaling and transformation capabilities, ideal for live design.

Comparative approaches for editorial use

Here’s how creative directors segment their tools based on production needs:

| Tool / Platform | Main Strength for Editorial | Ideal Use Case | Note on Workflow |
|---|---|---|---|
| getimg | Customization & Speed | Creating coherent campaigns via custom models (LoRA) and real-time editing. | Allows fine-grained control with ControlNet (sketch guidance) and In-painting. |
| Midjourney | Artistic Aesthetic | “Moodboard” visuals, conceptual covers, dreamlike inspiration. | Good for style, but sometimes difficult to “tame” for specific brand elements. |
| Adobe Firefly | Workflow Integration | Image extension (Generative Fill) and quick retouching in Photoshop. | Fluid for Creative Cloud suite users, focused on rights security. |
| Krea AI | Interactivity | Resolution enhancement and instant generation (Latent Consistency Models). | Used for refining 3D renders or sketches on the fly. |

Key takeaway: There is no single tool. The modern creative director acts as an orchestra conductor, selecting the platform (getimg, Midjourney, etc.) based on whether the priority is brand consistency, artistic exploration, or legal safety.

Expanding the Frame: Out-painting and Edited Realities

The use of AI is not limited to creation ex nihilo. A large part of editorial work involves adapting existing visuals to the multiple formats of social media and print. Here, the technique of out-painting (image extension) has become indispensable.

Adapting the format without losing the essence

Imagine a fashion photo tightly framed in portrait for a cover. For the web banner, a wide landscape format is needed. Previously, this required destructive cropping or a complex reshoot. Today, AI tools generate the missing scenery in context (the continuation of a Parisian street, the extension of a photo studio) with lighting that stays consistent with the original.
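To make the portrait-to-banner case concrete, here is a small Python sketch that works out how large the extended canvas must be and how much new scenery has to be generated. The function name `outpaint_canvas` and the example dimensions are assumptions made for illustration; real tools handle this step internally and may round sizes differently.

```python
def outpaint_canvas(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Return (new_width, new_height, pad_left, pad_top) for a canvas that
    reaches the target aspect ratio by extending the image, never cropping it.

    The original image is assumed to sit centered on the new canvas.
    """
    # Compare aspect ratios with integer cross-multiplication to avoid float error.
    if width * ratio_h < height * ratio_w:
        # Source is narrower than the target: keep the height, widen the canvas.
        new_width = -(-height * ratio_w // ratio_h)  # ceiling division
        new_height = height
    else:
        # Source is as wide as or wider than the target: keep the width, add height.
        new_width = width
        new_height = -(-width * ratio_h // ratio_w)  # ceiling division
    pad_left = (new_width - width) // 2
    pad_top = (new_height - height) // 2
    return new_width, new_height, pad_left, pad_top


# A 1600x2000 portrait cover adapted to a 16:9 web banner.
print(outpaint_canvas(1600, 2000, 16, 9))
# (3556, 2000, 978, 0)
```

In this example the original photo fills less than half of the banner's width, so most of the final image is generated. That proportion is a useful sanity check before committing to an extension rather than a reshoot.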

This flexibility addresses efficiency concerns discussed in other industry analyses. For instance, workflow optimization is central to Design Scene’s article on Filmora AI Portrait Cutout, which shows how AI simplifies complex cutouts for content creators. Similarly, getimg’s ability to manage a large, infinite canvas allows art directors to compose complex narrative scenes by assembling and extending multiple generated or photographed elements.

Inclusivity and synthetic diversity

Another crucial aspect is the ability to inject diversity into editorial visuals. Brands are under (legitimate) pressure to represent a pluralistic world. AI, when guided by professionals aware of biases, allows for adjusting virtual castings to better reflect this reality, or to create scenes that celebrate diverse cultures without clumsy appropriation.

However, this requires constant ethical vigilance. The goal is not to replace real models but to enrich visual storytelling. As explored in the article on Txema Yeste’s Dreamscapes, AI can serve to democratize dreamlike and artistic visions, allowing creators to push the boundaries of traditional fashion photography toward something more poetic and inclusive.

Key takeaway: Out-painting and generative editing allow for the recycling and adaptation of visual content for all distribution channels without reshoots, thus optimizing both budget and the carbon footprint of productions.

Frequently Asked Questions: AI in Editorial Workflows

Does using AI for editorial visuals raise copyright issues?

This is a complex question that is evolving rapidly. In a professional context, creative directors favor platforms that clarify the ownership of generated images. With professional subscriptions on platforms like getimg, the user generally holds the commercial rights to the images created, which is essential for publication. However, transparency with the audience (mentioning that the image is AI-generated) is becoming an ethical and sometimes legal standard.

Can AI maintain the consistency of a face across multiple images?

Yes, this is one of the major recent advancements. With techniques such as custom model training, “Face Reference,” and ControlNet, it is possible to generate the same character in different poses, outfits, or environments. This is a key feature for editorial storytelling and fashion.

Are AI tools replacing fashion photographers?

No, the industry consensus is that AI is an additive tool. It often replaces generic stock photography or allows for the creation of impossible sets, but for high fashion and celebrity portraits, the photographer’s eye and human connection remain irreplaceable. AI primarily intervenes in post-production or during the concept phases.

What is the learning curve for a creative director?

It is relatively short for the basics (text prompts), but mastery takes time. Understanding how to “talk” to the machine (prompt engineering) and how to use advanced parameters (seed, steps, guidance scale) distinguishes amateurs from experts who get the most out of powerful tools like those on getimg or Midjourney.
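As a rough illustration of how those advanced parameters are typically handled, the Python sketch below keeps the prompt, step count, and guidance scale fixed while varying only the seed, a common way to explore variations that stay comparable. `GenerationSettings` and `seed_sweep` are hypothetical names, and the default values are placeholders rather than recommendations from any platform.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical bundle of the settings mentioned above."""
    prompt: str
    seed: int                    # fixes the starting noise, so a result can be reproduced
    steps: int = 30              # number of denoising steps (placeholder default)
    guidance_scale: float = 7.0  # how strongly the prompt steers the image (placeholder default)


def seed_sweep(base: GenerationSettings, count: int) -> list[GenerationSettings]:
    # Produce `count` variants that differ only in their seed.
    return [replace(base, seed=base.seed + i) for i in range(count)]


base = GenerationSettings(prompt="brutalist architecture in a tropical forest", seed=1000)
for variant in seed_sweep(base, 3):
    print(variant.seed, variant.steps, variant.guidance_scale)
```

Recording the full settings alongside each approved image makes it possible to return to a variation later and adjust a single parameter.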

The adoption of artificial intelligence by creative directors does not mark the end of human creativity, but its amplification. By shifting from mere technical execution to curation and visual orchestration, creatives are pushing the boundaries of what is possible in editorial. Whether for speed of ideation or precision of the final render, tools like getimg are progressively establishing themselves as the new standards of the digital studio, alongside cameras and sketchbooks.